Time travel

If we go back to 2004, the goal was the same as today: to deliver features as fast as possible, with minimal impact on the business.

We were working on a system composed of COBOL programs and a DB2 database running on iSeries. Each change was tested by hand, with no integration tests. We also deployed each change manually, which was of course prone to human error!

With changes happening every day, and no appropriate control or automated process, the risk of causing an issue was high, and our chances of catching it before our customers did were very low. Instead of helping the business, our approach was more likely to cause problems than to provide the new features we needed.

Taking back control

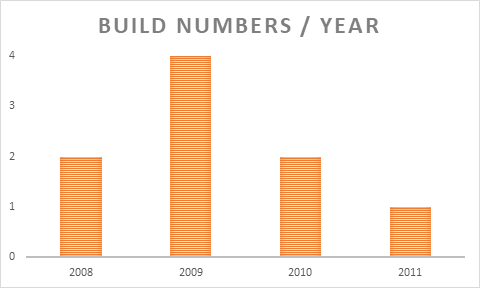

In 2008, while trying to reduce the issues caused by the necessary and requested business changes, we adopted a new solution.

Introduction of the Monolith “build”

Each “build” gathered all of our changes to the system into one big package. We would then deploy it per environment. Besides unit and integration testing, we also performed regression testing to make sure the system was still working. All of our tests were performed manually. We partially automated the deployments around 2010, with an internal tool for every change related to iSeries.

The issue with this approach was the time it took to get a final, deployable build. As a result, we ended up with very few production deployments: four a year at best.

Creation of Java GUI applications

In 2009, our first GUI tool written in Java, a basic CRM, went into production. At the time, we kept a PC waiting in the corner of the room, ready to generate the WAR files we would deploy to each environment. The components we were developing in Java followed SOA (service-oriented architecture).

Starting to automate the tests

In 2011, we started using a new testing tool, Cerberus, an in-house solution that is now open source. The tool was created to help with our integration testing and its automation. You can read more in this article.

Our system was more stable, but we were still not satisfied with the number of deployments. Having all changes inside one build meant that several ongoing projects were tied together, so one could not advance without the others. It also meant the business had to wait longer for a new feature, and in some cases, by the time the feature finally arrived in production, it was no longer what was needed. This approach encouraged local, parallel developments outside of the main system, further complicating an already complex system.

Building momentum

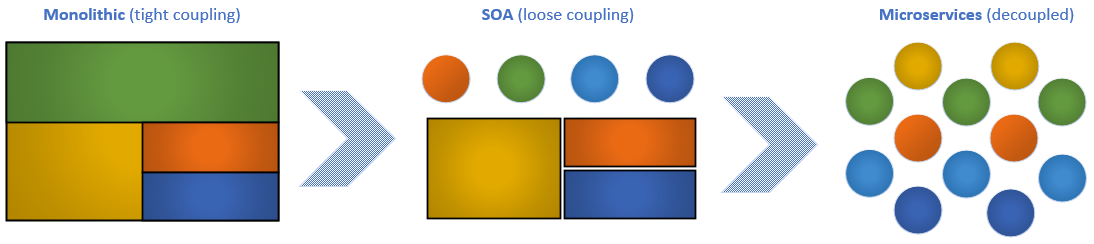

In order to be able to increase the number of deployments, with reduced dependencies, we needed to evolve our system. In other words, we needed to break the monolithic system down.

Jenkins adoption to build, test and eventually deploy components

Around 2013, we started using Jenkins and SonarQube, which was integrated with it, to validate and compile our components. The resulting release was then deployed manually in each environment.

Speed increase for moving out of COBOL

In 2014, we began replacing COBOL batch programs with Java batch components. We were automating the existing integration tests and adding more. Every time we delivered a new change, a batch of tests was executed, on request, to make sure everything was working as intended.

We started to deploy Java components from Jenkins, through the Cerberus application. We were able to increase the number of deployments, but we were still being held back by the legacy system: it was still difficult to avoid dependencies between deployments and between projects.

Speeding up

Simplifying the system by splitting it functionally

In 2016, we started to split the system by business area. Each area could have its own changes delivered to production independently and faster. We tested the changes individually and then integrated them into the system to make sure no regression was introduced.

We started to build more and more components in Java, closer to a microservices approach but still bigger in granularity. These components were already created with a specific business area in mind.

Implementation of CI/CD

In 2017, we began to discuss the concept of CI/CD. Starting in 2018, we moved from paper to reality.

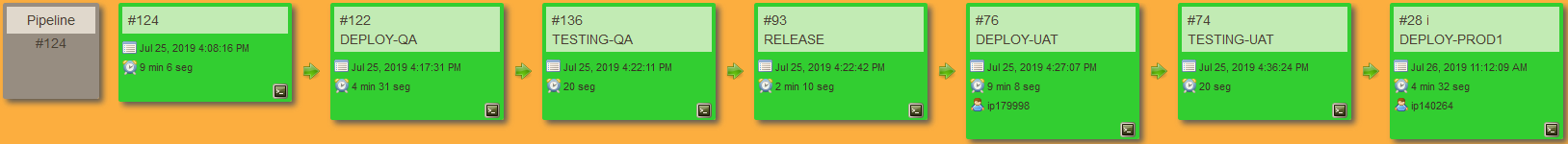

When we commit a change to the source code repository (SVN), it is picked up by Jenkins. Jenkins will then build the component. Upon a successful build, the component is automatically deployed to the qualification environment. Next, Cerberus executes a test campaign to confirm that everything is working as expected. Finally, if all goes well, a release is created and uploaded to the artifacts repository.

The deployment to UAT may or may not be automatic, depending on how critical the component is. As before, a test campaign is executed next, since we’ve set the pipeline to automatically trigger the UAT tests after a successful UAT deployment.

All our production deployments are triggered manually since we have a rule that a GO from the business is mandatory before any installation.
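To make the gates in this flow easier to follow, here is a minimal sketch of the pipeline logic in Python. It is an illustration only, not our actual Jenkins or Cerberus configuration: the names `Component`, `run_pipeline`, `uat_approved` and `business_go` are hypothetical, and the sketch assumes that it is the critical components that need a manual UAT deployment.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Component:
    name: str
    critical: bool  # assumption: critical components need a manual UAT deployment


def run_pipeline(
    component: Component,
    build: Callable[[Component], bool],
    run_campaign: Callable[[Component, str], bool],
    uat_approved: bool = False,
    business_go: bool = False,
) -> List[str]:
    """Return the ordered list of steps that completed for one commit."""
    steps: List[str] = []

    # A commit triggers the build; a failed build stops everything.
    if not build(component):
        return steps
    steps.append("build")

    # Successful builds are deployed to qualification automatically,
    # then a test campaign runs against that environment.
    steps.append("deploy:qualification")
    if not run_campaign(component, "qualification"):
        return steps
    steps.append("test:qualification")

    # Only a tested build becomes a release in the artifacts repository.
    steps.append("release")

    # UAT deployment is automatic unless the component is critical
    # and nobody has approved it yet (hypothetical rule, see lead-in).
    if component.critical and not uat_approved:
        return steps
    steps.append("deploy:uat")
    if not run_campaign(component, "uat"):
        return steps
    steps.append("test:uat")

    # Production always waits for an explicit GO from the business.
    if business_go:
        steps.append("deploy:production")
    return steps


if __name__ == "__main__":
    # Stand-ins for the real build and test campaign; both always succeed here.
    build_ok = lambda component: True
    campaign_ok = lambda component, environment: True

    crm = Component(name="crm", critical=False)
    print(run_pipeline(crm, build_ok, campaign_ok))
    print(run_pipeline(crm, build_ok, campaign_ok, business_go=True))
```

The point of the sketch is that every stage is a gate: a failure or a missing approval stops the run where it is, and production is never reached without the business GO, whatever happened automatically before it.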

The number of production deployments per day is increasing, and our goal is to reach 100 a day.

Cloud native preparation

By the end of 2018, we had adopted a cloud-native architecture to make the system more agile and more resilient, and we had introduced an event-driven architecture. In 2019, we got the GO to develop our first critical component using this approach.

Next Steps

A few years ago, at La Redoute, we already had the right idea: to deliver business features as fast as possible, with minimal impact on the system. However, neither the infrastructure nor the system was prepared to support that. We were able to transform that.

Our journey was not completed in 2019, and we will continue to evolve the system over the next few years. Now, the sky is the limit, and migrating to the cloud is on our horizon!