Introduction

The LaRedoute.com portal is one of the main drivers of international activity for La Redoute and contributes significantly to the brand’s international exposure.

The success of LaRedoute.com depends heavily on its accessibility by a worldwide audience. Multi-lingual capabilities are therefore considered a crucial feature.

The first and most obvious advantage of having multi-lingual capabilities on LaRedoute.com is increased reach. Translating the site into multiple languages makes the content and products accessible to an audience that does not speak the site’s origin language. This opens the site to a larger potential customer base, which can lead to increased sales and revenue.

Another advantage is improved user experience and customer satisfaction. By making the site available in a user’s native language, it is easier for users to understand the content, navigate the site, and make accurate purchases on LaRedoute.com.

Multi-lingual capabilities can also help to improve search engine optimisation (SEO) and increase visibility of LaRedoute.com in search engines. With the site translated into multiple languages, it can rank higher in search engines for relevant keywords in those languages, driving more qualified traffic from organic search activity.

Nonetheless, multi-lingual capabilities bring an element of complexity. For an e-Commerce site like LaRedoute.com, the overheads of multi-lingual capabilities are significant and wide-ranging. For example, the product catalogue must be accurately translated into every supported language at all times. Similarly, all the customer animation material must be available in every supported language. This includes both perishable and non-perishable content, such as advertisement banners, promotional information, and informative landing pages.

Therefore, even though multi-lingual capabilities may be technically available, making a site available in multiple languages requires a careful cost-benefit analysis exercise. Although some metrics may help, this remains somewhat of a chicken-and-egg problem: how do we calculate the additional revenue that a new language will drive through the site before we incur the costs of translating all the content and decide that the benefits really outweigh the costs?

Problem Domain

LaRedoute.com natively supports three languages: English, French and German. The content for these three languages is curated, with significant and continuous effort spent by both product and content management teams.

Existing metrics and analytics showed potential benefit from having LaRedoute.com available in an additional ten (10) languages in the immediate term. At the same time, it was not guaranteed that each of these ten languages would bring sufficient incremental business to justify the efforts of several teams that would be required to launch and maintain a manually curated native language in the traditional way.

Project Scope

This project was therefore spawned with the goal of improving the outreach of LaRedoute.com by making the portal available in several other non-native languages, in a manner that could efficiently test the return on investment (ROI) hypothesis that analytical data could suggest.

In this context, five main objectives were defined.

- Decrease the time to market, effort, and cost to launch non-native languages on LaRedoute.com.

This would satisfy the need to test a hypothesis cost-effectively, in the immediate term.

- Achieve a zero-effort maintenance procedure for non-native languages.

This would unlock the possibility of vastly increasing the languages available on LaRedoute.com without significant and continuous language curation efforts.

- Restrict the scope of non-native languages to the web user experience.

The web user experience was considered to have sufficient footprint to validate whether a particular non-native language warranted the development and maintenance effort to become a native language. Thus, other user experiences, such as native mobile applications and email communication, were excluded from the project scope.

- Translate the web user experience in a transparent manner to the source system.

The web user experience on LaRedoute.com is powered by different digital systems, all of which are multi-lingual and aware of native languages. Decoupling a non-native language from these digital systems was desirable: ideally, launching a non-native language would have zero impact on existing digital systems.

- Optimise the user journey for non-native languages along the main key performance indicators (KPIs).

Response times and content quality are generally considered crucial for a buyer’s user experience [1]. Therefore, we wanted to find the right balance between optimising along these two KPIs, without impinging on our ability to align to the rest of the objectives set out for this project.

Approach

It was evident from the outset that introducing a significant degree of automation to alleviate most of the inherent overheads of managing multi-lingual capabilities on LaRedoute.com was the only way in which the project scope could be satisfied. Research tackling a similar problem domain [1] shows that machine translation technology is an effective tool.

We also decided to build, rather than buy, a solution for our problem. Whilst several software-as-a-service (SaaS) solutions exist, some of which deliver advanced functionality that goes even beyond our desired scope, we considered that our problem domain and scope were sufficiently particular to benefit from a custom-built solution.

Architecture

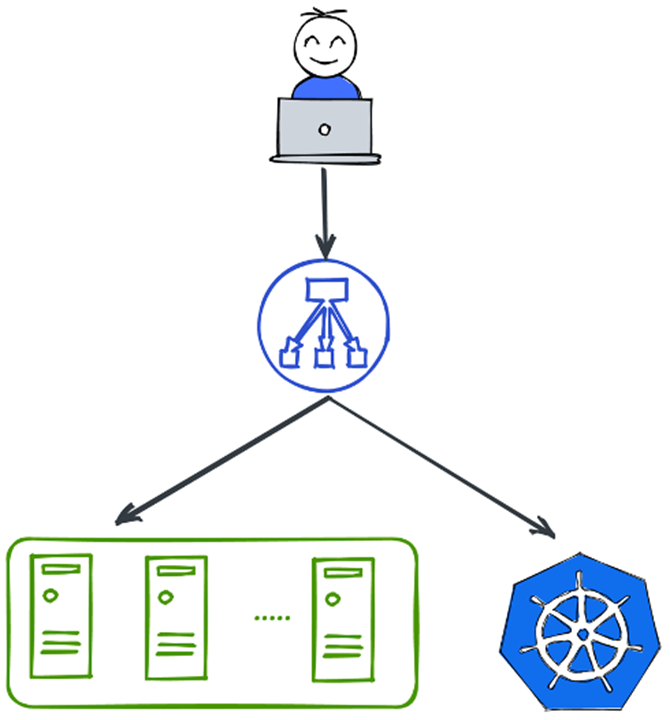

Figure 1 illustrates, at a very high level, part of the existing customer-facing architecture of La Redoute’s digital web platform, which serves the native languages on LaRedoute.com.

A user’s request (e.g., a visit to a page on LaRedoute.com) arrives on a load balancer. The load balancer uses a rule-based configuration to forward the request to a target application. Presently, the load balancer is backed by a heterogeneous set of applications. In turn, these use a heterogeneous infrastructure, comprising both infrastructure-as-a-service (IaaS) and container-as-a-service (CaaS) configurations.

Figure 1: High-Level Architectural Diagram of the La Redoute digital web platform

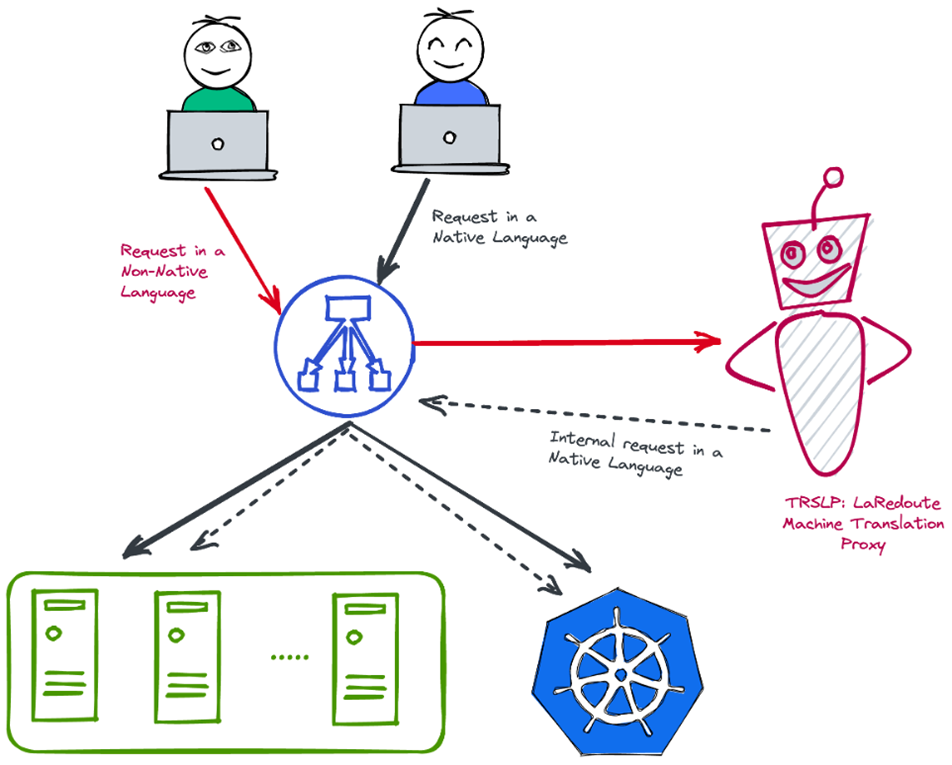

Figure 2 illustrates, at a high level, our design for an architecture that introduces new non-native languages based on machine translation.

The architecture is based on a proxy approach, which is a technique that can be used to manipulate the response from a web application before the user receives it, and to manipulate the request from a user before it is received by the target application. This technique is well documented in similar as well as orthogonal use cases such as web personalisation [2] and user activity tracking [3], whilst also being supported by a short black-box analysis of existing SaaS solutions for website machine translation.

Figure 2: High-Level Target Architecture for non-native languages on LaRedoute.com

Technical Stack

The La Redoute Way

As the old saying goes, “a dwarf standing on the shoulders of a giant may see farther than a giant himself”. This project builds on the solid architectural and technical foundations that La Redoute have built and evolved over the years, as well as the best practices that are today driving even the most complex digital systems within La Redoute.

These include the use of .NET technology to build cloud-native applications that can be containerised [4] and deployed in Kubernetes [5], using GitLab for the distributed version control and continuous integration and continuous deployment (CI/CD) solution of choice [6].

Google Translate for Machine Translation

Building a machine translation service was certainly out of our scope. Fortunately, several such services are available as SaaS products. Fairly recent studies [7] show that Google Translate, Google’s machine translation technology, is one of the most widely used machine translation products.

Google Translate employs statistical methods and neural machine translation models trained on large parallel corpora to produce translations which, albeit not guaranteed to be perfect, were considered of a sufficiently high quality for our use case.

The Google Translate service is programmatically available via REST APIs that can either be accessed directly, or through SDKs, which exist for most mainstream programming languages. The Google Translate API exposes two endpoints:

- The TranslateText endpoint, which accepts an HTML snippet that fits in a maximum of 200KB and returns the translated equivalent in near-real time.

- The BatchTranslate endpoint, which is suitable for asynchronous translation in bulk of batches of documents in several formats, including HTML.
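To make the synchronous endpoint more concrete, the following is a minimal sketch of how a request for it could be assembled. It assumes that the endpoint described above corresponds to the `translateText` method of the Cloud Translation v3 REST API; the project identifier and the helper name `build_translate_text_request` are hypothetical, authentication is deliberately left out, and nothing is actually sent. It is written in Python purely for illustration and is not the code used in our .NET implementation.

```python
import json

# Public REST host of the Cloud Translation API.
API_HOST = "https://translation.googleapis.com"


def build_translate_text_request(project_id, fragments, source_lang, target_lang):
    """Return (url, json_body) for a v3 translateText call; nothing is sent."""
    url = f"{API_HOST}/v3/projects/{project_id}/locations/global:translateText"
    body = {
        "contents": list(fragments),        # one entry per HTML snippet
        "mimeType": "text/html",            # tells the service to preserve markup
        "sourceLanguageCode": source_lang,
        "targetLanguageCode": target_lang,
    }
    return url, json.dumps(body, ensure_ascii=False)


# "my-project" is a placeholder, not a real project identifier.
url, body = build_translate_text_request(
    "my-project", ["<p>Livraison gratuite</p>"], "fr", "it"
)
print(url)
print(body)
```

A real call would POST this body with an OAuth bearer token, or use one of the SDKs mentioned above instead of building the request by hand.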

Redis as a Distributed Cache

Google Translate performs machine translation efficiently, and at an acceptable pay-per-character cost. Nonetheless, it was considered infeasible to perform machine translation for every request that uses a non-native language of LaRedoute.com. Furthermore, the result of an automatic translation operation is expected to remain largely static over time, and thus the process lends itself very well to effective caching.

At the same time, LaRedoute.com is a large website, with many pages containing a substantial amount of text to be translated. The cache must therefore be able to hold a large volume of translation results.

Redis was chosen as a distributed cache that could bring our implementation closer to the objectives. This database management system (DBMS) comes with several useful features:

- Being an in-memory DBMS, Redis is inherently fast for both READ and WRITE operations.

- Being a distributed DBMS, Redis can be scaled horizontally to store large data sets and handle many operations across several machines if necessary.

- Being an eventually consistent DBMS, Redis is partition tolerant and can be deployed in a highly available (yet still performant) configuration for resiliency.

In effect, Redis operations are much faster than performing machine translation, and this aligns with our requirement of optimising the performance KPI. Reading results from a cache is also effectively free (besides the cost of running the distributed cache), and so using a distributed cache aligns with our requirement of producing a cost-effective solution. At the same time, since Redis is scalable and highly available, it does not represent a single point of failure (SPOF) that would be detrimental to our solution.
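The caching behaviour described in this section follows the familiar cache-aside pattern, which can be sketched as below. This is an illustration only: `InMemoryCache` is a stand-in for a Redis client so that the example runs without a server, and the key layout, the default TTL, and the `translate` callable are all hypothetical choices rather than details of our implementation.

```python
import hashlib
import time


class InMemoryCache:
    """Stand-in for a Redis client: get/set with a per-entry time-to-live."""

    def __init__(self):
        self._entries = {}

    def get(self, key):
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if time.monotonic() >= expires_at:
            del self._entries[key]  # expired entries behave like misses
            return None
        return value

    def set(self, key, value, ttl_seconds):
        self._entries[key] = (value, time.monotonic() + ttl_seconds)


def cache_key(text, target_lang):
    """Hypothetical key layout: a fixed-length hash keeps keys short and uniform."""
    digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
    return f"tr:{target_lang}:{digest}"


def translate_cached(text, target_lang, cache, translate, ttl_seconds=86400):
    """Return the cached translation, calling `translate` only on a cache miss."""
    key = cache_key(text, target_lang)
    cached = cache.get(key)
    if cached is not None:
        return cached
    translated = translate(text, target_lang)
    cache.set(key, translated, ttl_seconds)
    return translated
```

With a real Redis deployment, `get` and `set` would map onto the corresponding Redis commands, with the TTL passed as the entry's expiry.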

YARP as a Programmable Reverse Proxy

YARP (Yet Another Reverse Proxy) is a .NET library designed to facilitate the development of high-performance, production-ready reverse proxy servers which are extensively customisable [8].

Effectively, YARP allows a .NET application to listen for incoming user requests and, either through configuration or customisations, transparently transform the request, forward it to other systems, and finally transform the response before sending it to the user. A core feature of YARP is its support for transforms. A request transform changes an HTTP request before sending it to a source system (e.g., add an HTTP header, or add a query string parameter). A response transform changes the HTTP response before sending it to the user. YARP comes with several transforms out of the box, and it also allows custom transforms to be developed.

YARP was therefore deemed to be a perfect fit to develop an application that adopts the proxy approach and delivers the objectives of this project.
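The idea of request and response transforms can be illustrated independently of YARP. The sketch below is a conceptual model in Python and deliberately does not reproduce YARP's .NET API: the `Request` and `Response` types, the `proxy` function, and the two example transforms are all hypothetical names introduced for this illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Request:
    path: str
    headers: dict = field(default_factory=dict)


@dataclass
class Response:
    status: int
    body: str


def proxy(request, upstream, request_transforms=(), response_transforms=()):
    """Apply request transforms, call the upstream, then apply response transforms."""
    for transform in request_transforms:
        request = transform(request)
    response = upstream(request)
    for transform in response_transforms:
        response = transform(request, response)
    return response


def add_header(name, value):
    """Request transform factory: returns a transform that adds one header."""
    def transform(request):
        return Request(request.path, {**request.headers, name: value})
    return transform


def uppercase_body(request, response):
    """Response transform: a trivial stand-in for 'translate the page'."""
    return Response(response.status, response.body.upper())


def upstream(request):
    """Fake source system that echoes what it received."""
    return Response(200, f"hello from {request.path} via {request.headers.get('X-Proxy')}")


result = proxy(Request("/home"), upstream, [add_header("X-Proxy", "demo")], [uppercase_body])
print(result.body)  # HELLO FROM /HOME VIA DEMO
```

The source system sees only the transformed request and is unaware that its response is altered afterwards, which is the property that makes the proxy approach transparent to existing systems.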

Implementation

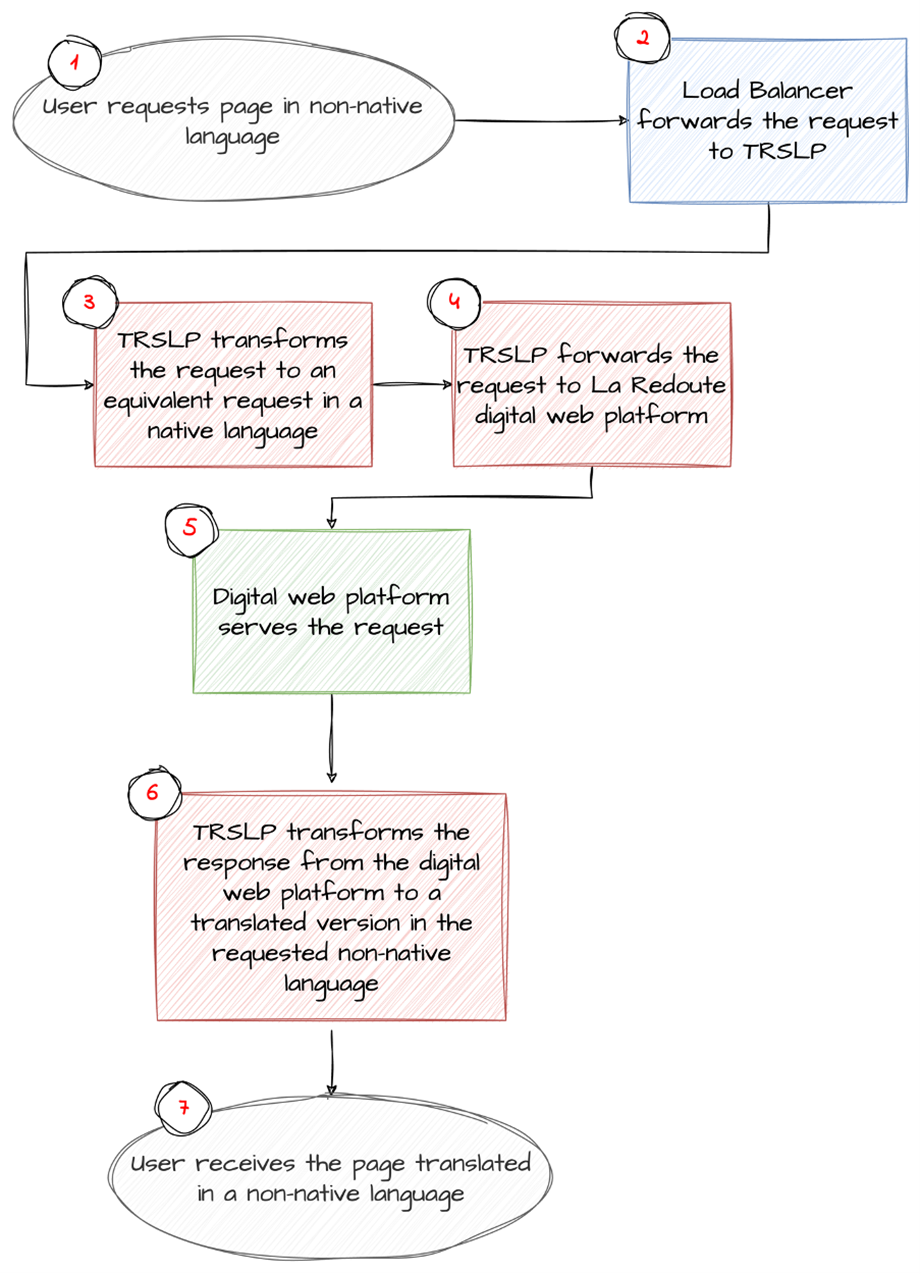

Figure 3 gives a high-level overview of the workflow used to serve a user request in a non-native language.

Figure 3: Translation Proxy Workflow

Response Transformation

The core of the translation logic is executed at step 7 of Figure 3. Here, a custom YARP response transformation performs the following steps:

- Parse the HTML.

  - At this stage, the HtmlAgilityPack HTML parser is used.

- Extract all text fragments.

  - These are heuristically identified as the text within HTML elements, and the values of @placeholder and @data-text attributes.

- For every unique text fragment F:

  - Get the translation of F from Redis.

  - If no translation for F exists, call the Google Translate API and cache the result.

  - Replace all occurrences of F with its translation in the original HTML document.
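The steps above can be sketched as follows. This is a simplified illustration rather than our implementation: it uses Python's standard `html.parser` in place of the .NET parser, a plain dictionary in place of Redis, and a caller-supplied `translate` function in place of the Google Translate API. Skipping `script` and `style` content is an assumption of this sketch, and all class and function names are hypothetical.

```python
from html import escape
from html.parser import HTMLParser

TRANSLATABLE_ATTRS = {"placeholder", "data-text"}
SKIPPED_TAGS = {"script", "style"}  # assumption: their content is never translated


class FragmentRewriter(HTMLParser):
    """Re-emits the HTML it parses, passing each text fragment through `mapper`."""

    def __init__(self, mapper):
        super().__init__(convert_charrefs=True)
        self.mapper = mapper
        self.out = []
        self.skip_depth = 0

    def _emit_tag(self, tag, attrs, closing):
        parts = [tag]
        for name, value in attrs:
            if value is None:  # boolean attribute such as `disabled`
                parts.append(name)
                continue
            if name in TRANSLATABLE_ATTRS and value.strip():
                value = self.mapper(value)
            parts.append(f'{name}="{escape(value, quote=True)}"')
        self.out.append("<" + " ".join(parts) + closing)

    def handle_starttag(self, tag, attrs):
        if tag in SKIPPED_TAGS:
            self.skip_depth += 1
        self._emit_tag(tag, attrs, ">")

    def handle_startendtag(self, tag, attrs):
        self._emit_tag(tag, attrs, " />")

    def handle_endtag(self, tag):
        if tag in SKIPPED_TAGS and self.skip_depth:
            self.skip_depth -= 1
        self.out.append(f"</{tag}>")

    def handle_data(self, data):
        if self.skip_depth or not data.strip():
            self.out.append(data)  # scripts, styles and whitespace pass through
        else:
            self.out.append(escape(self.mapper(data), quote=False))

    def handle_decl(self, decl):
        self.out.append(f"<!{decl}>")

    def handle_comment(self, data):
        self.out.append(f"<!--{data}-->")


def rewrite(html, mapper):
    parser = FragmentRewriter(mapper)
    parser.feed(html)
    parser.close()
    return "".join(parser.out)


def translate_page(html, cache, translate):
    # Step 1: parse once, only collecting the unique text fragments.
    fragments = set()

    def collect(text):
        fragments.add(text)
        return text

    rewrite(html, collect)
    # Step 2: look up each fragment in the cache, translating only on a miss.
    for fragment in fragments:
        if fragment not in cache:
            cache[fragment] = translate(fragment)
    # Step 3: re-emit the page with every fragment replaced by its translation.
    return rewrite(html, lambda text: cache[text])
```

Replacing fragments while re-emitting the parsed document, rather than with a plain string substitution on the raw HTML, avoids accidentally rewriting tag names or attribute values that happen to contain the same text.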

The above approach was primarily built to work around existing limitations of the Google Translate API. In practice, pages on LaRedoute.com can exceed the 200KB limit of the synchronous Google Translate endpoint, whilst the response time of the batch Google Translate endpoint is in the order of seconds and is therefore unsuitable for on-the-fly page translation. Effectively, by identifying text snippets in the HTML page, and sending only those for translation, we could parallelise the translation of several parts of the page using the synchronous (i.e., near real-time) Google Translate endpoint, with no individual request ever exceeding the 200KB limit imposed by the API.
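One way to picture this fan-out is shown below. The sketch groups fragments into batches under a byte budget and translates the batches concurrently. The batching strategy, the `max_bytes` default, the worker count, and the `translate_batch` callable (which takes a list of fragments and returns their translations in the same order) are assumptions made for illustration and do not describe our production configuration.

```python
from concurrent.futures import ThreadPoolExecutor


def batches_under_limit(fragments, max_bytes):
    """Group fragments into batches whose UTF-8 size stays within max_bytes.

    A single fragment larger than max_bytes is still emitted, alone in its batch.
    """
    batch, size = [], 0
    for fragment in fragments:
        n = len(fragment.encode("utf-8"))
        if batch and size + n > max_bytes:
            yield batch
            batch, size = [], 0
        batch.append(fragment)
        size += n
    if batch:
        yield batch


def translate_in_parallel(fragments, translate_batch, max_bytes=200_000, max_workers=8):
    """Translate unique fragments concurrently; returns {fragment: translation}."""
    unique = list(dict.fromkeys(fragments))  # de-duplicate, keep first-seen order
    batches = list(batches_under_limit(unique, max_bytes))
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = pool.map(translate_batch, batches)  # results keep batch order
        return {
            source: target
            for batch, translated in zip(batches, results)
            for source, target in zip(batch, translated)
        }
```

Because each batch is an independent request, the total latency is roughly that of the slowest batch rather than the sum of all of them.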

This approach also serves to optimise the caching mechanism. By reducing the granularity of the cache entries to the text fragment level, rather than caching at the page level, the cache hit ratio improves as:

- A cached translation of the same text snippet appearing on different pages is re-used.

- A change in one or a few parts of a page’s text does not require the translation of the whole page, but only of the changed parts.
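The effect of cache granularity can be illustrated with a toy comparison. The three pages below are invented sample data, not content from LaRedoute.com; the sketch simply counts how many characters would have to be sent for translation when caching whole pages versus individual fragments.

```python
def characters_sent(pages, units_of):
    """Total characters sent for translation when caching the units from units_of."""
    seen, total = set(), 0
    for page in pages:
        for unit in units_of(page):
            if unit not in seen:  # cache miss: this unit must be translated
                seen.add(unit)
                total += len(unit)
    return total


# Invented sample pages: a shared header and footer around one changing block.
header, footer = "Free delivery", "Contact us"
pages = [
    (header, "Red dress", footer),
    (header, "Blue dress", footer),
    (header, "Blue dress - now 20% off", footer),
]

page_level = characters_sent(pages, lambda page: ["\n".join(page)])
fragment_level = characters_sent(pages, lambda page: page)
print(page_level, fragment_level)
```

At page granularity every page is a distinct cache entry, so the shared header and footer are translated again for each page; at fragment granularity they are translated once.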

Request Transformation

Besides translating the response, the translation proxy (TRSLP) module also incorporates a smaller custom YARP request transform. Effectively, we wanted to allow users of non-native languages to use the product search function on LaRedoute.com in their language of choice.

Therefore, for HTTP requests that represent a product search, our custom YARP request transform identifies the search query and translates it (again using the Google Translate API) to the native language, before proxying the request to the digital web platform. This process is represented in step 3 of Figure 3.
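A request transform of this kind might look like the sketch below. The search path `/search` and the query parameter `q` are hypothetical placeholders, since the actual URL structure of the search function is not described here, and `translate` stands in for the call to the machine translation service.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

SEARCH_PATH = "/search"  # hypothetical path of the product search page
QUERY_PARAM = "q"        # hypothetical name of the search query parameter


def translate_search_request(url, translate):
    """Rewrite a search URL so that its query is in the native language.

    URLs that are not product searches are returned unchanged.
    """
    parts = urlsplit(url)
    if parts.path != SEARCH_PATH:
        return url
    params = parse_qsl(parts.query, keep_blank_values=True)
    rewritten = [
        (name, translate(value) if name == QUERY_PARAM and value else value)
        for name, value in params
    ]
    return urlunsplit(parts._replace(query=urlencode(rewritten)))


# Toy translator for the example; a real one would call the translation service.
demo = translate_search_request(
    "https://example.com/search?q=scarpe+rosse&page=2",
    lambda text: {"scarpe rosse": "red shoes"}[text],
)
print(demo)  # https://example.com/search?q=red+shoes&page=2
```

Only the search term is translated; all other parameters, such as pagination, are forwarded untouched so that the source system behaves exactly as it would for a native-language request.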

Results

The TRSLP module was deployed in production and effectively introduced ten new languages on LaRedoute.com. Analysis of metrics from these non-native languages shows encouraging results.

Only a minority of users switch from a non-native language to a native language on LaRedoute.com, which suggests that users of a non-native language find the quality and speed of the translated pages adequate. Initial metrics also show that users can convert and finalise transactions using non-native languages.

The Redis cache also proved to be highly effective. The system achieves a cache hit ratio of more than 99%, so fewer than 1% of lookups require a call to Google Translate. Furthermore, by increasing the time-to-live (TTL) of Redis cache entries, we could observe a significant reduction in the cost of the Google Translate service, further highlighting the importance of the distributed cache layer in our setup.

Going Forward

Our solution is not without its limitations. For example, its scope is limited to the web-based user experience, and further limited to textual information. The end-to-end user experience is therefore not fully consistent: any images that contain text in the native language will remain untranslated, and any email communication (e.g., an order confirmation email) is also sent out using the native language.

Furthermore, the system does not provide any administration capabilities. These may become needed going forward: for example, administrators may wish to override some of the automatic translations by manual entry to improve the overall quality of the translated pages.

Nonetheless, we consider that the project achieved its initial scope and all the objectives set out.

LaRedoute.com has now expanded its footprint to ten new languages without incurring major development efforts or financial investment. At the same time, our approach is continuously producing new metrics that can funnel into an accurate cost-benefit analysis. It now remains to be seen which of the new non-native languages generate sufficient revenue to warrant the cost of their automatic translation, or even the much higher cost of becoming native languages. The new metrics may perhaps contradict the original ones and indicate that some of the languages that have been introduced in fact do not represent sufficient ROI to even deserve a presence on LaRedoute.com.

In conclusion, the La Redoute digital platform now incorporates a tool that allows new languages to be shipped with such minimal effort that inaccurate forecasts of a cost-benefit analysis are superfluous: new languages can simply be deployed, and their real effectiveness measured.

References

[1] J. Guha and C. Heger, “Machine translation for global e-commerce on eBay,” in Proceedings of the 11th Conference of the Association for Machine Translation in the Americas: MT Users Track, Vancouver, Canada: Association for Machine Translation in the Americas, Oct. 2014, pp. 31–37. [Online]. Available: https://aclanthology.org/2014.amta-users.3

[2] V. F. De Santana and M. C. C. Baranauskas, “Continuous web personalization using selector-template pairs,” in Proceedings of the 16th International Web for All Conference, 2019, pp. 1–9.

[3] R. Atterer, M. Wnuk, and A. Schmidt, “Knowing the user’s every move: user activity tracking for website usability evaluation and implicit interaction,” in Proceedings of the 15th international conference on World Wide Web, 2006, pp. 203–212.

[4] C. Bredeche, “Progressive delivery on a high traffic digital platform,” 2022. [Online]. Available: https://laredoute.io/blog/progressive-delivery-on-a-high-traffic-digital-platform/

[5] F. Evelette, “Centralize application deployment in K8S & its consequences,” 2022. [Online]. Available: https://laredoute.io/blog/centralize-application-deployment-in-k8s-its-consequences/

[6] P. Savoldelli, “Our Digital Front-End Is Now a Monorepo,” 2022. [Online]. Available: https://laredoute.io/blog/our-digital-front-end-is-now-a-monorepo/

[7] I. Rivera-Trigueros, “Machine translation systems and quality assessment: a systematic review,” Language Resources and Evaluation, vol. 56, no. 2, pp. 593–619, 2022.

[8] Microsoft, “YARP: Yet Another Reverse Proxy,” 2022. [Online]. Available: https://microsoft.github.io/reverse-proxy/