What was our first strategic event-driven microservices project

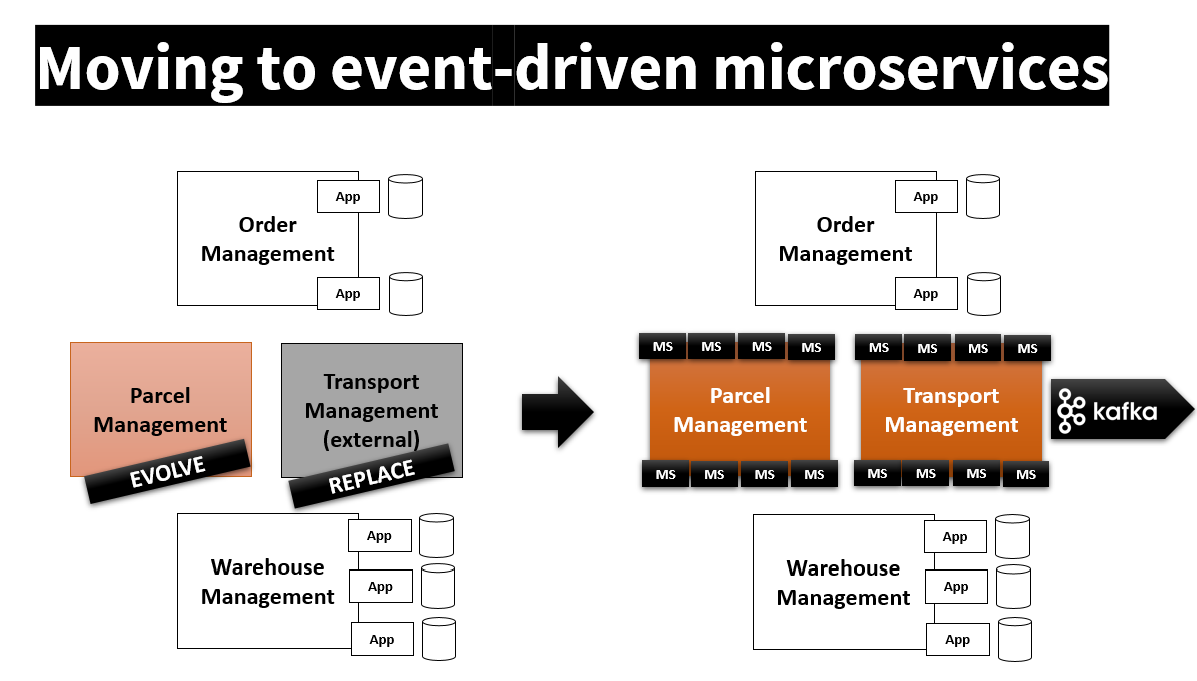

The project scope was at the heart of our back office: parcel and transportation management. The existing systems had become a limiting factor for the business for various reasons.

Our goal was to provide more value-added and tailored delivery services for our customers. For example, letting customers receive their goods in a specific time slot rather than waiting for hours.

From an internal perspective, it was also an opportunity to get near-real-time data processing, reporting, and observability. This would create the foundations for future data science use-cases.

Moving to an event-driven microservices architecture was a key decision to support the requirements of an incremental and evolutionary approach.

But it came with a series of changes from what we were used to.

A decentralized and decoupled architecture raised key testability questions

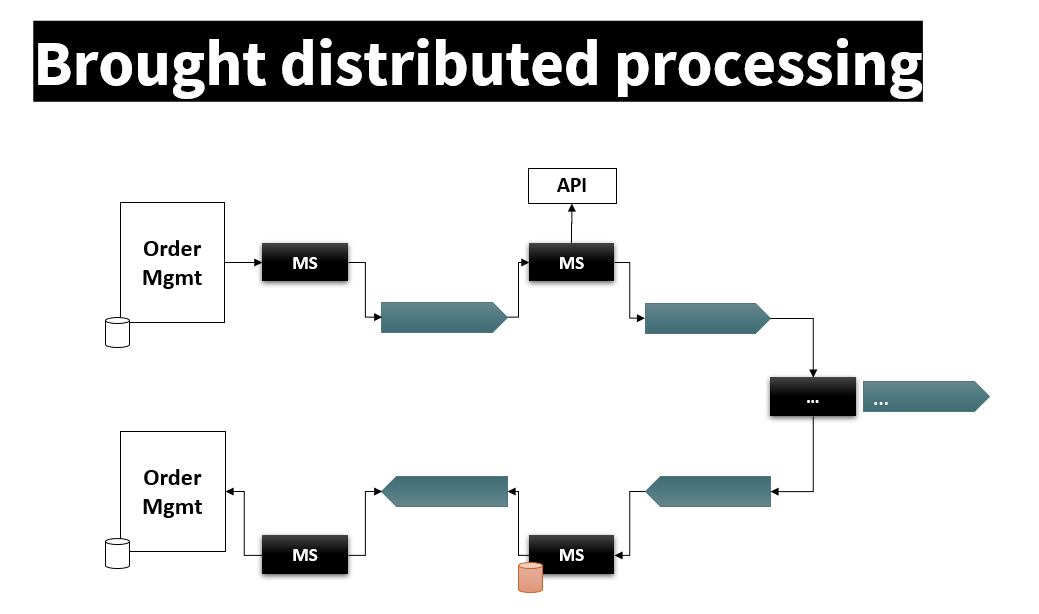

By nature, our components were independent modules, decoupled from each other both technically and temporally through events.

So, the first structural change was to distribute the processing across independent components. This raised the question of how to test eventual consistency, as we were mainly used to synchronous business transactions.

Similarly, we had to question how to test business functions that rely on asynchronous processing. The request-response model was no longer adequate.

Lastly, having events as the source of truth changed our focus for test environment management (TEM) and test data management (TDM).
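To make the eventual-consistency question concrete, here is a minimal Python sketch of a polling assertion, a common way to test asynchronous processing when there is no response to wait for. Everything in it is hypothetical: the `eventually` helper, the `parcel_status` read model, and `publish_parcel_scanned` are illustrative stand-ins rather than code from the project, and a background thread simulates the consumer.

```python
import threading
import time
from typing import Callable


def eventually(condition: Callable[[], bool], timeout: float = 2.0, interval: float = 0.02) -> bool:
    """Poll `condition` until it returns True or `timeout` seconds elapse."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if condition():
            return True
        time.sleep(interval)
    return condition()  # one last check once the deadline is reached


# Hypothetical read model, updated asynchronously by a consumer.
parcel_status = {}


def publish_parcel_scanned(parcel_id: str) -> None:
    """Simulate publishing an event whose effect is only visible after a delay."""

    def consumer() -> None:
        time.sleep(0.1)  # simulated processing latency
        parcel_status[parcel_id] = "IN_TRANSIT"

    threading.Thread(target=consumer, daemon=True).start()


publish_parcel_scanned("P-001")
# The test does not assert immediately; it waits for the state to converge.
status_converged = eventually(lambda: parcel_status.get("P-001") == "IN_TRANSIT")
```

The timeout bounds how long a test may wait, so a broken consumer fails the test instead of blocking the pipeline.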

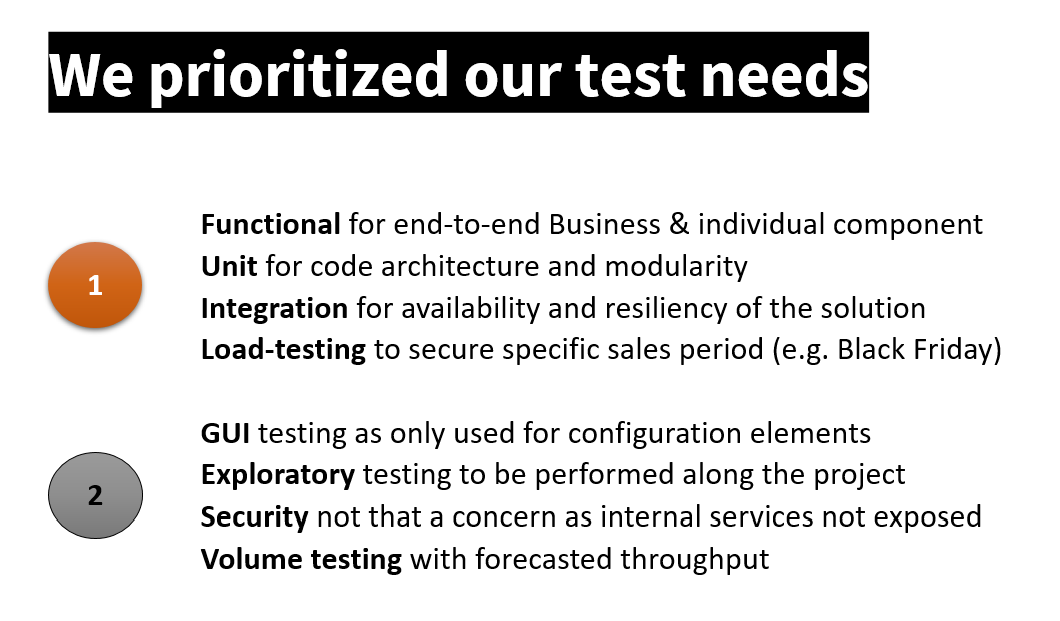

We started by defining and prioritizing our testing requirements

We were out of our comfort zone, with project delivery and real-life constraints to be considered. So, we started by organizing what had to be done.

Ensuring that the business functionalities worked was a non-negotiable requirement for those core back-office components. We ranked functional testing, both end-to-end and per component, as priority one.

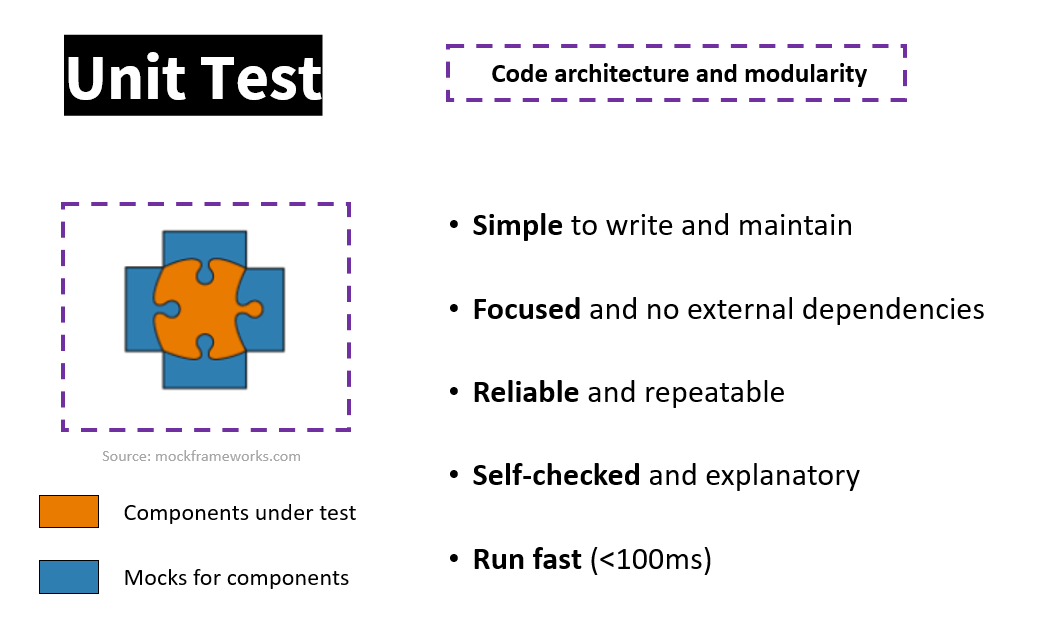

Each component had to provide an independent business function with the identified decoupling. So, we kept unit testing to ensure the code modularity.

The solution also had to be highly available and resilient, due to its business value and its continuous operations requirement.

Our operations are subject to peaks of traffic due to business activity. Consequently, we retained specific performance tests to be executed.

Based on those priorities, we set aside graphical, exploratory, and security testing. No major requirements were identified in those areas for internal back-office processing.

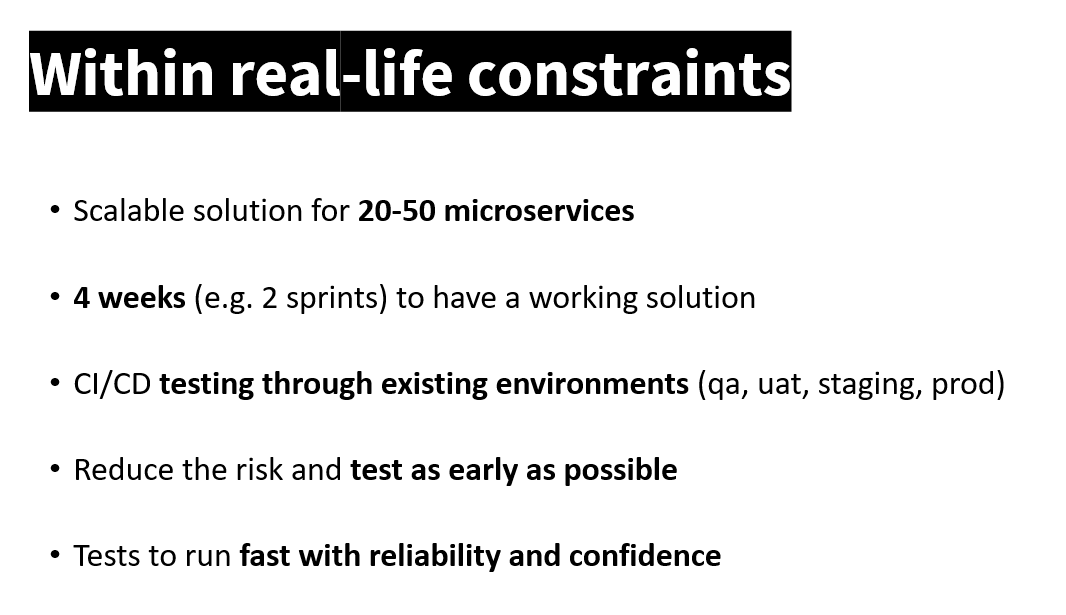

Our testing solution had to work under the project and real-life constraints

Identifying non-functional requirements and constraints was key for the test architecture.

We knew from the business analysis that we would deliver 20 to 50 microservices. The testing solution had to work for this number of components.

Working in an incremental and iterative approach, we had to be able to run the tests right after the first sprints, within the following 2 to 4 weeks.

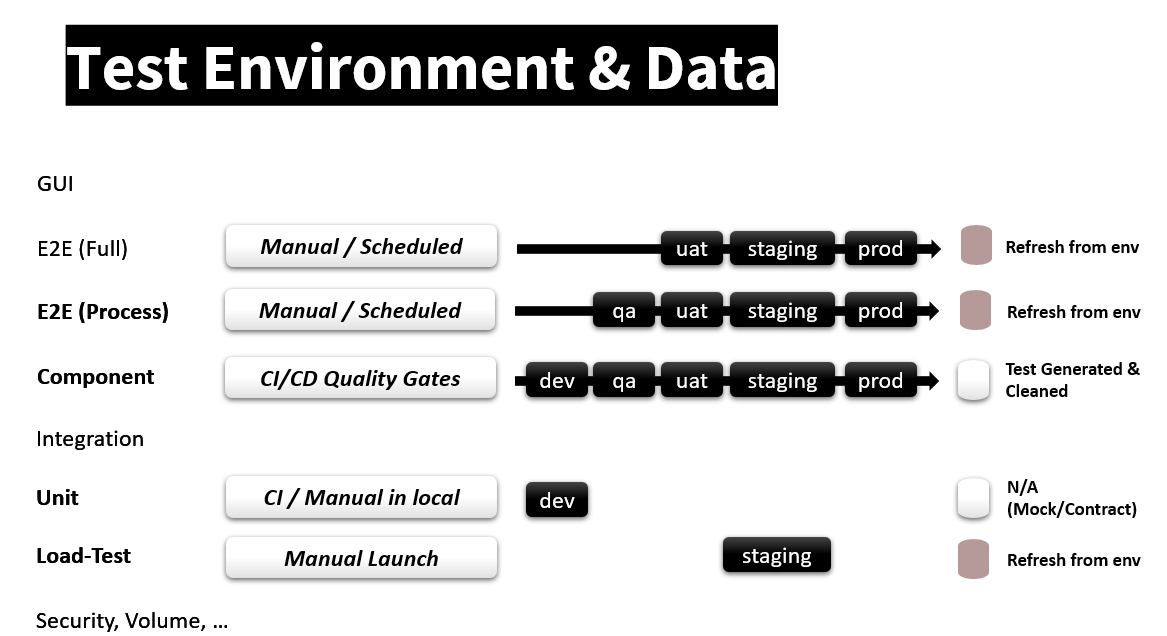

Our delivery pipeline was structured with CI/CD and quality gates through various environments. Every microservice would fit into this structure.

Overall, our tests had to provide confidence in our software releases. Thus, they had to be executed early in the process, run fast, and be reliable in order to provide sustainable value.

How we automated functional testing of business functions

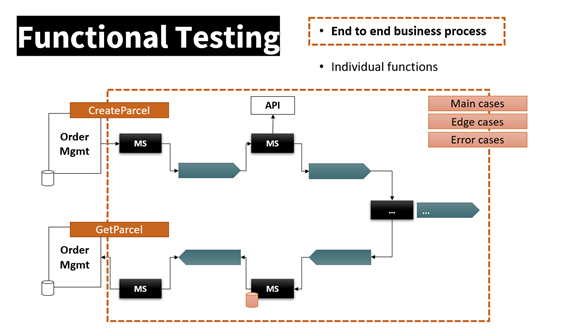

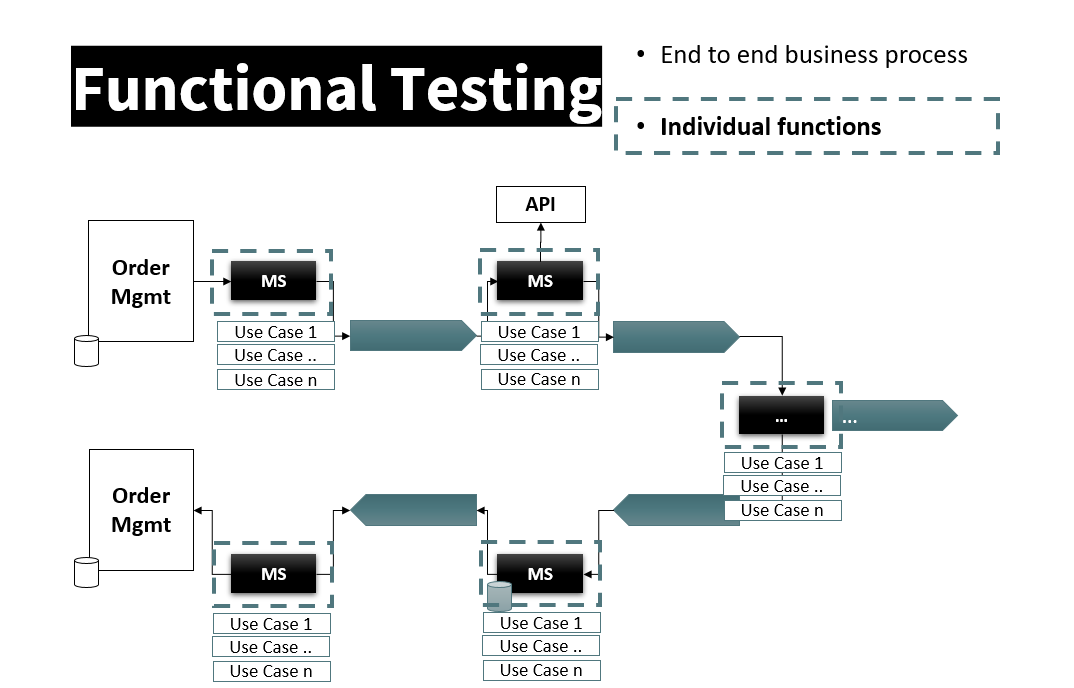

Our functional tests had to be performed at two levels: end-to-end and component. We first clarified the scope of each one to avoid any overlap.

The main goal of the end-to-end functional tests was to validate the asynchronous distributed processing. By nature, these tests are more complex, costly, and slow to run. Therefore, we decided to focus on the major functional cases only.

In contrast, component tests had to ensure the completeness of business functions. They exercise the various use cases, limited to the input and output interfaces of each component. This also required the technical decoupling to be properly implemented.
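A component test limited to the input and output interfaces can be sketched as follows. This is an illustration under assumptions, not our actual implementation: the `DeliverySlotComponent`, its event names, and the in-memory list standing in for the output topic are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Event:
    type: str
    payload: dict


class DeliverySlotComponent:
    """Hypothetical component: consumes slot requests, emits a confirmation or a rejection."""

    def __init__(self, publish: Callable[[Event], None], capacity_per_slot: int = 1):
        self._publish = publish  # output interface, injected so tests can capture it
        self._capacity = capacity_per_slot
        self._booked = {}

    def handle(self, event: Event) -> None:
        if event.type != "DeliverySlotRequested":
            return  # events of other types are ignored
        slot = event.payload["slot"]
        used = self._booked.get(slot, 0)
        if used < self._capacity:
            self._booked[slot] = used + 1
            self._publish(Event("DeliverySlotConfirmed", event.payload))
        else:
            self._publish(Event("DeliverySlotRejected", event.payload))


def run_component(inputs: List[Event]) -> List[Event]:
    """Feed input events to a fresh component and collect everything it publishes."""
    outputs: List[Event] = []
    component = DeliverySlotComponent(publish=outputs.append)
    for event in inputs:
        component.handle(event)
    return outputs


# Two requests for the same slot with a capacity of one: the second is an edge case.
outputs = run_component([
    Event("DeliverySlotRequested", {"parcelId": "P-001", "slot": "10:00"}),
    Event("DeliverySlotRequested", {"parcelId": "P-002", "slot": "10:00"}),
])
output_types = [event.type for event in outputs]
```

Because the test only sees events going in and events coming out, it stays valid when the internals of the component change.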

Unit tests at build time to ensure the code architecture

The main goal of our unit tests is to ensure the software code and modules are properly designed. For instance, we should be able to easily mock interfaces.

Unit tests are designed to be simple, focused, reliable, self-checking, and fast to execute. They are written by the software developer, preferably before the code itself.
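As an illustration of mocking an interface, here is a minimal sketch using Python's standard `unittest.mock`. The `ParcelNotifier` class, the `publish` method of its publisher, and the `parcel-events` topic name are assumptions made for the example.

```python
from unittest.mock import Mock


class ParcelNotifier:
    """Hypothetical module that depends on a publisher interface, not on a concrete broker client."""

    def __init__(self, publisher):
        self._publisher = publisher

    def notify_delivered(self, parcel_id: str) -> None:
        if not parcel_id:
            raise ValueError("parcel_id must not be empty")
        self._publisher.publish("parcel-events", {"type": "ParcelDelivered", "parcelId": parcel_id})


def test_notify_delivered_publishes_one_event():
    publisher = Mock()  # stands in for the real publisher
    ParcelNotifier(publisher).notify_delivered("P-001")
    publisher.publish.assert_called_once_with(
        "parcel-events", {"type": "ParcelDelivered", "parcelId": "P-001"}
    )


def test_notify_delivered_rejects_empty_id():
    publisher = Mock()
    try:
        ParcelNotifier(publisher).notify_delivered("")
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError for an empty parcel id")
    publisher.publish.assert_not_called()
```

If a module cannot be tested this way without starting a broker, that is usually a hint that the dependency should be injected.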

In our context, they are executed once at build time, before packaging our artifacts. From there, the same software artifact is deployed through the various environments.

Integration tests only performed for non-standard cases

The software components relied on two main platforms, Kubernetes (k8s) and Apache Kafka. We defined the integration tests we needed by asking ourselves a few questions.

Do we need integration tests when we already have functional and unit tests? For exceptional specific cases, maybe, but not by default.

Do we need contract testing? When we call an external service that is not available or lacks a test environment, yes, probably. Otherwise, we did not have to mock the interactions with our Kafka topics.

Is it the product team’s responsibility to test the Kafka and k8s platform availability and resiliency? Is it not already addressed by the Platform & SRE team? Indeed, it was. So we had to focus on the application layer design.

Do we need to test all cases of error management and retries? In fact, our component functional tests already covered the various use cases, including the edge cases. So, there was no need to test them again.

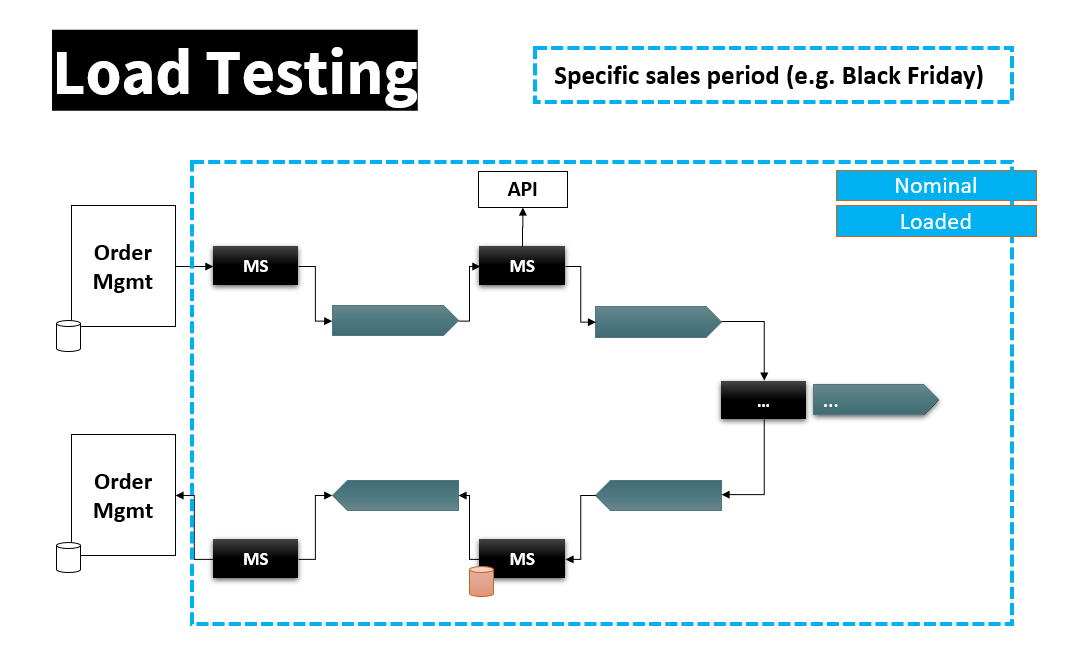

Load-tests were minimal to be confident under major known business peaks

Performance testing is a vast topic that covers a whole set of test techniques, from volume testing and spike testing to endurance testing.

Our main objective was to ensure the system would cope with known periods of major business activity. In our case, a specific sales period generates an increased load on the system for the following days.

Consequently, we first focused on load tests at the volumes we had identified as possible. That would give us confidence that we could support the major business periods.
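The idea of a load test at an identified volume can be sketched in a few lines of Python. This is only an illustration under assumptions: `handle_event` is a hypothetical placeholder that sleeps instead of calling the real system, and the volume and concurrency figures are arbitrary, not our actual numbers.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from statistics import quantiles


def handle_event(event_id: int) -> float:
    """Hypothetical unit of work; returns its own latency in seconds."""
    started = time.perf_counter()
    time.sleep(0.005)  # placeholder for the real call to the system under test
    return time.perf_counter() - started


def run_load(total_events: int, concurrency: int) -> dict:
    """Send `total_events` through a pool of `concurrency` workers and summarize the run."""
    started = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = list(pool.map(handle_event, range(total_events)))
    elapsed = time.perf_counter() - started
    return {
        "events": len(latencies),
        "throughput_per_s": total_events / elapsed,
        # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile
        "p95_latency_s": quantiles(latencies, n=20)[18],
    }


report = run_load(total_events=200, concurrency=20)
```

Comparing the reported throughput and 95th percentile latency against the expected peak figures gives a simple pass or fail signal.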

We aligned on test environment and test data management strategies

The management of test environments and data is a requirement for reliable and consistent tests. We addressed their integration for each type of testing.

The end-to-end functional tests required coherent data across the systems. We decided to refresh this data from production rather than maintain a dedicated dataset, since we already had mechanisms in place.

Similarly, the load tests would simulate increased business activity on the complete system. Therefore, we applied the same data refresh technique.

However, functional tests for each component were limited to the interfaces. We made each test create and remove its own test data. That way, the tests were fast to execute, reliable, and did not conflict with the end-to-end tests.
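One way to have each test create and remove its own data is a small context manager, sketched below in Python. The `InMemoryParcelStore` and the `temporary_parcel` helper are hypothetical; in a real component test the store would be whatever the component reads from.

```python
import uuid
from contextlib import contextmanager


class InMemoryParcelStore:
    """Hypothetical stand-in for the data store used by a component."""

    def __init__(self):
        self._rows = {}

    def save(self, parcel_id: str, fields: dict) -> None:
        self._rows[parcel_id] = dict(fields)

    def get(self, parcel_id: str):
        return self._rows.get(parcel_id)

    def delete(self, parcel_id: str) -> None:
        self._rows.pop(parcel_id, None)

    def count(self) -> int:
        return len(self._rows)


@contextmanager
def temporary_parcel(store: InMemoryParcelStore, **fields):
    """Create a parcel with a unique id for one test and always remove it afterwards."""
    parcel_id = f"test-{uuid.uuid4()}"  # unique id avoids clashes with other tests
    store.save(parcel_id, fields)
    try:
        yield parcel_id
    finally:
        store.delete(parcel_id)  # runs even if the test body raises


store = InMemoryParcelStore()
with temporary_parcel(store, status="CREATED") as parcel_id:
    seen_during_test = store.get(parcel_id)
left_after_test = store.count()
```

The unique identifier and the guaranteed cleanup are what keep such tests from interfering with data refreshed for the end-to-end tests.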

Likewise, the unit tests only required test data defined locally inside the tests. Moreover, the use of mocks removed the need for any external data.

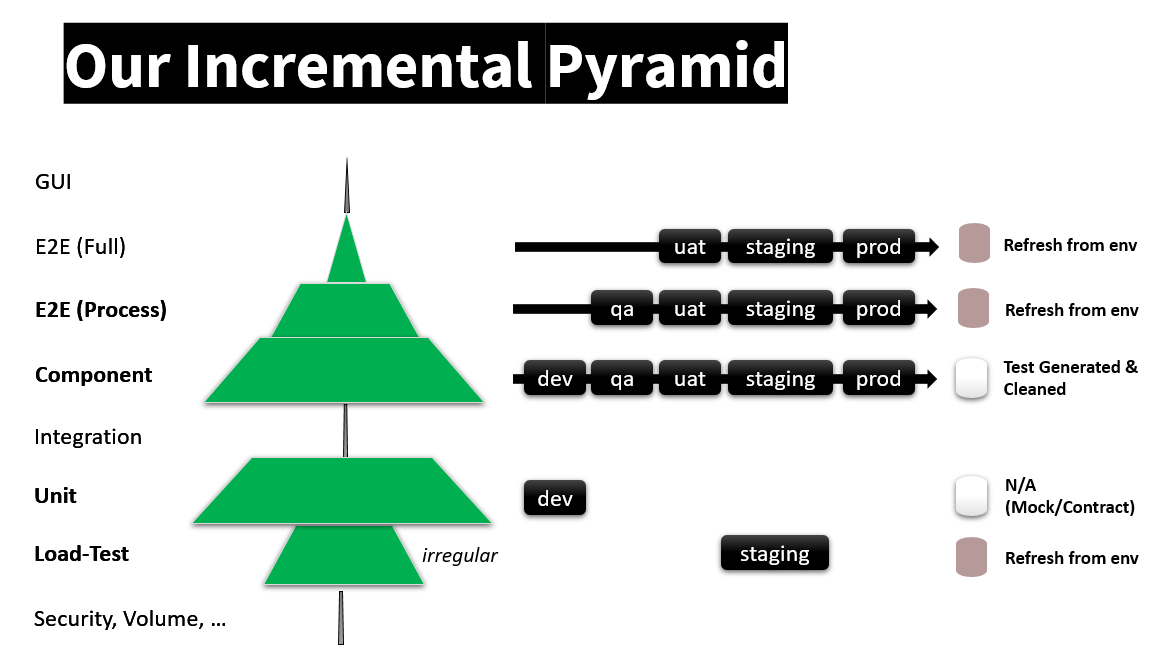

Our Test Pyramid is incremental and evolves with new capabilities

We managed to scale our test architecture for the 20 to 50 identified microservices and within the identified constraints.

Our pyramid is summarized in the following schema. It does not align with known patterns in agile contexts, such as the Test Pyramid or our Front-end Test Pyramid.

From our experience in the project, we identified key practices to keep and evolve. The first one is to design proper event-driven microservices. Done well, they provide significant value in terms of reliability, performance, and testability.

We also want to apply decoupling to our tests for better reliability. We are looking at ephemeral environments for our component testing, separating them from the end-to-end ones.

Lastly, we want to move our performance tests from a manual and irregular basis to a systematic and fully automated process. This would bring earlier detection, better confidence, and scalability.

Our key takeaways from this strategic project experience

This strategic project was a real challenge for us, raising questions that we had to answer outside of our comfort zone.

The test prioritization exercise, aligned with our main goals, was key at the beginning. It helped us focus our effort on delivering a working solution rather than dispersing our energy.

Moreover, defining a test architecture that clarified the design, scope, and layers of our tests was a success factor. It let us avoid overlapping tests, conflicting tests, and unnecessary maintenance.

The investment in automation in the right areas enabled us to scale the approach. For example, the functional test automation in the CI/CD quality gates was very useful for every release.

In contrast, the load tests were less mature, so a manual approach was sufficient and probably better at this early stage.

Ultimately, we strongly recommend addressing quality as a product requirement for the whole project team. The business functions, the software design, and its operations are intimately linked, so QA is an ever-transversal role!